An opportunity for creative practice, or a threat?

AI is now part of the mainstream collective consciousness and, as with most new technology, there is both excitement and trepidation around its use. Generative AI (GAI) is a subset of AI that utilises Machine Learning (ML) to produce an output from human input. The goal of GAI is to reproduce the work that humans would produce, but more efficiently (Marr, 2023) and, hopefully, if you are the computer scientist creating it, in a way that is indistinguishable from the work of humans. The inputs the platforms accept currently vary: some allow a text prompt, while others create music based on a chosen style and genre. Not all platforms allow modification of the output after it has been generated.

I’m going to explore how GAI impacts music production through a series of real-world attempts to use the platforms to create music, and objectively evaluate their potential and shortcomings.

As this research is practice-based, it was necessary to use the platforms, so a playlist of the examples mentioned in this essay is available at:

Technology as a tool for creativity

Artists, from painters and their paintbrushes to musicians and their instruments, have always had a symbiotic relationship with technology.

History shows us that no matter what tools we are provided with as artists and producers, we will find ways to utilise them that were not always intended. For example, T-Pain used Antares AutoTune (Watch the Sound: AutoTune, 2021) to an extreme to modify his vocals and create his signature sound. He did this so that it would sound different to anyone else out there (ibid.). In this way, a tool can be utilised creatively in ways that the original creator never intended.

As Brian Eno states, “The technologies we now use have tended to make creative jobs do-able by many different people: new technologies have the tendency to replace skills with judgement – it’s not what you can do that counts, but what you choose to do, and this invites everyone to start crossing boundaries.” (Eno, 1996, p. 394). This way of thinking, I believe, can be a more helpful way to look at these advances in technology: the removal of barriers to creativity. For a more recent view, Lee Gamble describes how technology is evolving: “While neural networks are still ‘learning’ and improving, they’re still producing ‘the poor image’. If a technology becomes so good at replicating something, isn’t something lost? We have arrived at a technological endpoint with less possibility for interpretation.” (Gamble, 2023). These viewpoints on technology, taken almost 30 years apart, show that little has changed in relation to the idea of embracing the creative opportunities that technology can enable. Both points of view see technology as a tool for further exploration rather than an end point. Much as the sampler inspired musicians to sample and remix the works of others, we now see the same effect with other emerging technologies, which are being used to fuel further creativity. In this way we can look at GAI as another creative tool to kick-start the creative process, or even inform decisions along the way.

Democratisation of creativity?

GAI for music production and composition is still new. Unlike GAI for images, which is quite advanced, I think that GAI for music has quite a way to go. To test this, I’m going to show a few examples of work that I have asked some AI platforms to make and look objectively at the results. I’ll also look at what two experienced music producers created with current GAI platforms, and at their experience of using them.

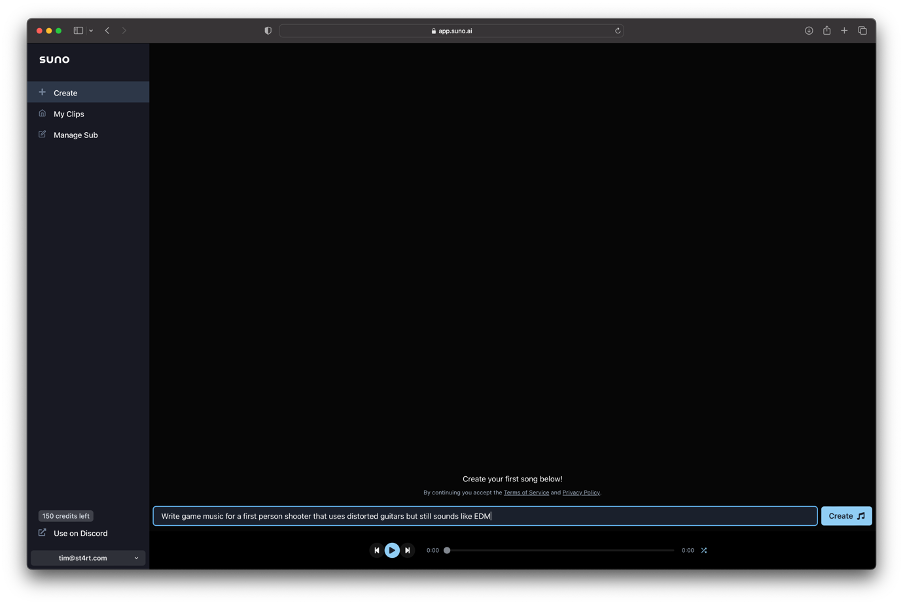

I first decided to try Suno.ai as it was mentioned on The Vergecast by Ian Kimmel: “Suno is kind of like the Midjourney for music.” (Pierce, 2023). As Midjourney is a text input-based GAI like ChatGPT, I thought that this would be the most interesting in terms of the output generated.

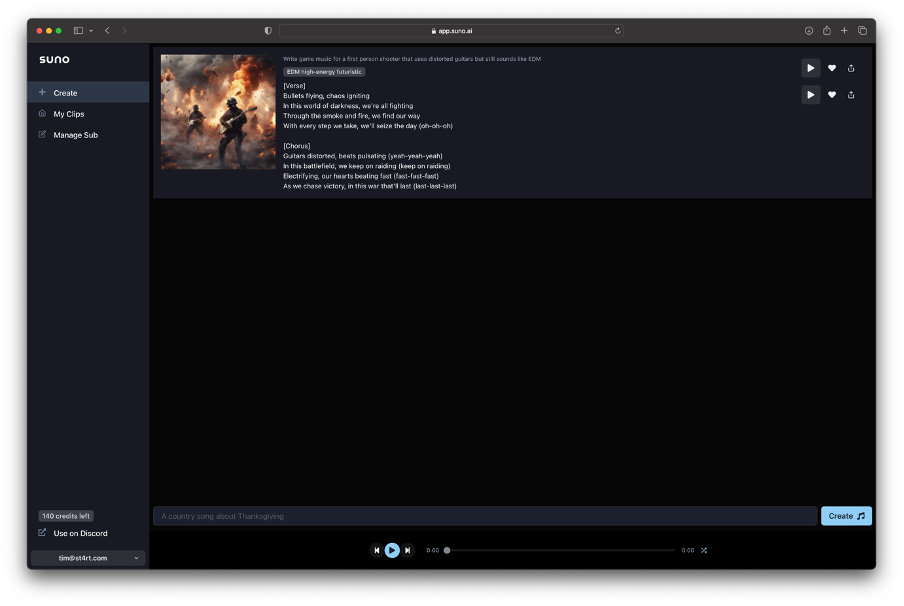

Is it any good? Well, considering that in two minutes you get the first verse and a chorus, with lyrics sung by an AI voice, and in two versions, it is pretty mind-blowing (Suno.ai: Version 1 – FPS | Suno.ai: Version 2 – FPS). I will excuse the terrible lyrics for a minute (“Bullets flying, chaos igniting”). The thing that amazes me is how finished they sound. Even though the output is quite low fidelity and there are some obvious glitches, it is a lot better than I imagined. The voice doesn’t sound all that realistic, but in two minutes I couldn’t create something that finished. However, there are a few drawbacks. First, I cannot get it to generate another verse, or edit the track to make it longer, as there are no tools to do this on the platform. Interestingly, on The Vergecast, Ian Kimmel and Charlie Harding had the same dilemma (Pierce, 2023). They used another AI tool, a stem separator, to split the finished track into its component parts: “Now we have the problem that we want these individual pieces of material to play with. So, we go to a website called AudioShake.AI and they will stem your pre-existing music for you.” (ibid.). Second, while the first track works for the genre that I was going for, the second track it produced wouldn’t fit well in a game. For contrast, I was tasked with creating a composition for an FPS game for Composing for Media, and this is what I created (FPS – Project – V2).

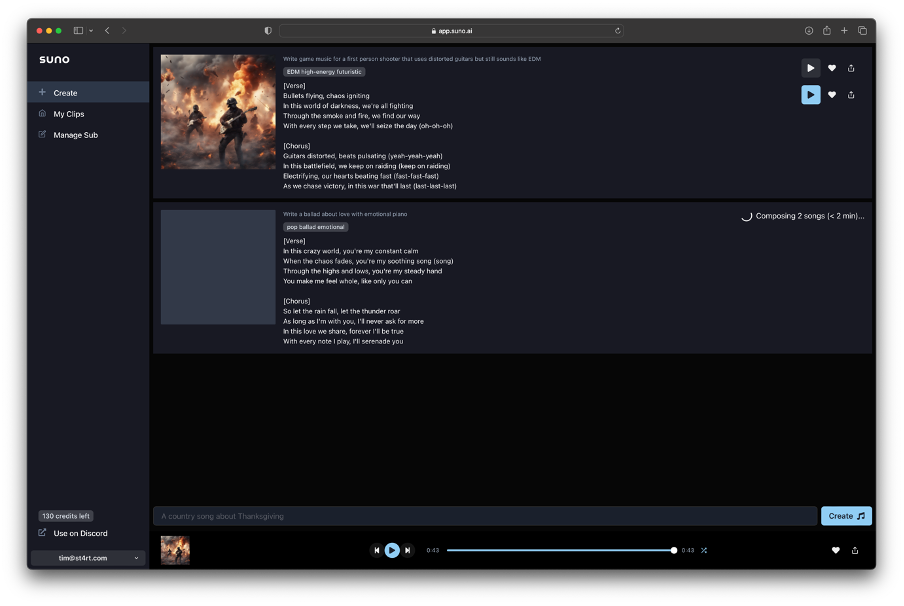

I next attempted a different input into Suno to see what it would bring up. This time I used “Write a ballad about love with emotional piano” (Figure 3).

Again, I was impressed with the musical quality of what it brought back. While the audio quality is objectively awful, the music is not bad (Suno.ai: Version 1 – Ballad). The sound is low fidelity, as if it had been created using technologies from the early 2000s. I can only surmise that the training data for this GAI included tracks like Leona Lewis’s “Bleeding Love” (Leona Lewis, 2007) labelled as ballads, which would explain why the platform didn’t give me back a simpler piano and vocal arrangement. The vocals drift in pitch, and again the lyrics are forgettable. However, when the drums come in, it suggests that the model has learned from its training data that the listener needs a build-up to keep aural interest. With better lyrics, I believe that this track could work.

With both versions using the same lyrics, I’ll move on to the music. The second version it created (Suno.ai: Version 2 – Ballad) is interesting: it starts better, but it gets a little boring. Halfway through, it does increase the instrumentation, but the piano part remains dull.

In terms of creativity, Suno is a great jumping-off point but limited in its potential, mainly because you cannot build a full track. As a tool for removing barriers to knowledge and skill, it would work for YouTube Shorts but not for somebody looking to release a track. From a music producer’s perspective, I can see this as a starting point. Would I use this in my creative practice? Probably not, mainly because using stem separators, as Charlie Harding and Ian Kimmel did, requires a lot of effort afterwards in processing the samples to get them to sound any good.

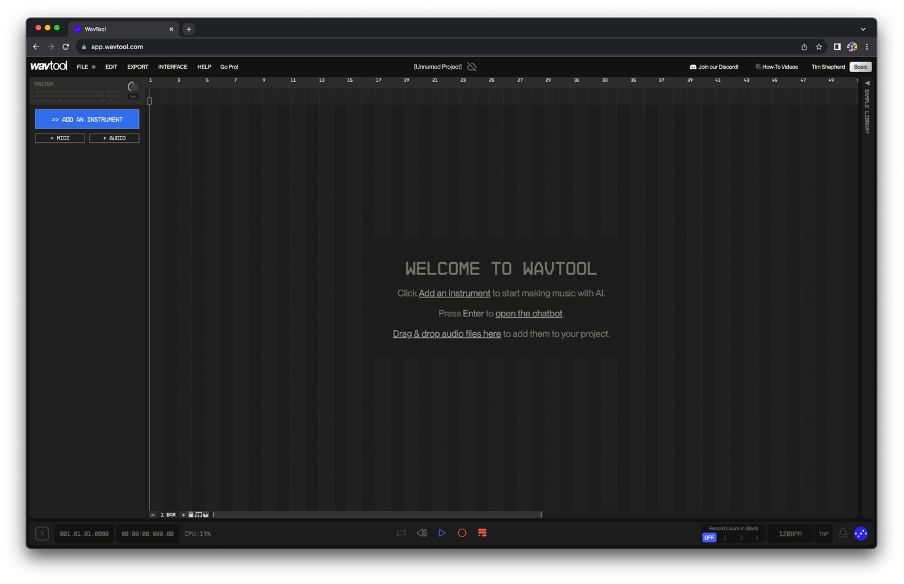

Next, I headed to wavtool.com, where they say “With WavTool’s Conductor AI, you can easily create instruments and tracks to back the thing you want to focus on – yourself.” (Wavtool, no date). WavTool is a browser-based Digital Audio Workstation (DAW) that has a chatbot-driven AI interface and GAI composition technologies built in (Figure 4).

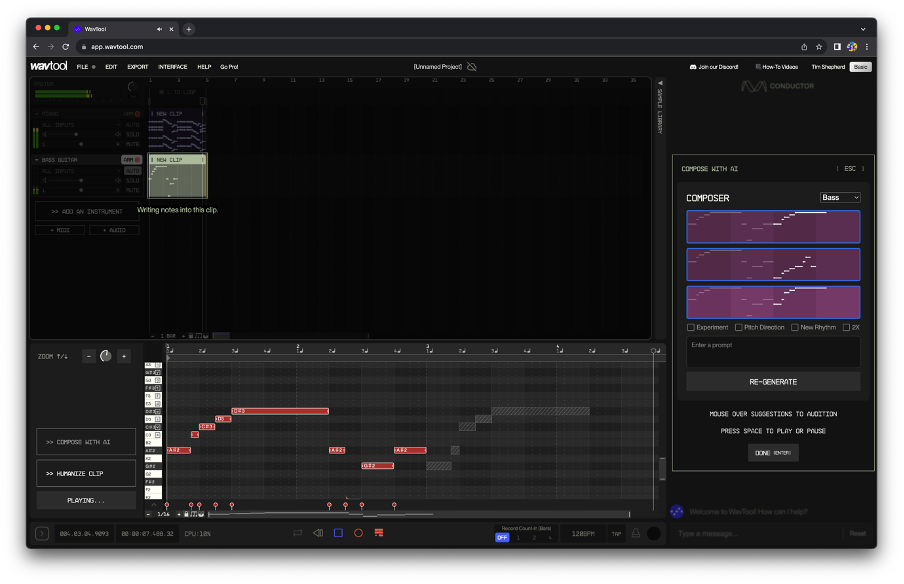

I started by adding an instrument, and then using the “Compose with AI” feature to generate ideas (Figure 5).

The final output (Wavtool: Version 1 – Without using chat function) is objectively awful. The instruments sound like cheap Casio keyboard sounds from the 90s, and while that may be a creative choice, it isn’t what I was after. However, there are some good parts to it. The chord progression and the rhythm are not something that I would have come up with myself, and while I didn’t like the instrumentation, this platform does allow you to edit what is generated, change sounds (although none of the sounds on the platform is a complete solution), and edit what is there down to individual notes. There is also the option to export the tracks or the entire project to MIDI or audio. However, the need to export to MIDI or audio suggests that this platform is about idea generation rather than full production, which makes sense considering how bad the sounds are.

The first platform, Suno, created tracks that sounded reasonable, but you couldn’t edit them without using other tools and platforms, and most likely an actual DAW. WavTool was very flexible and did generate some interesting ideas (Wavtool: Version 2 – Guided by chat function), but the results from the DAW itself sounded basic and amateur. It was time to look for another platform.

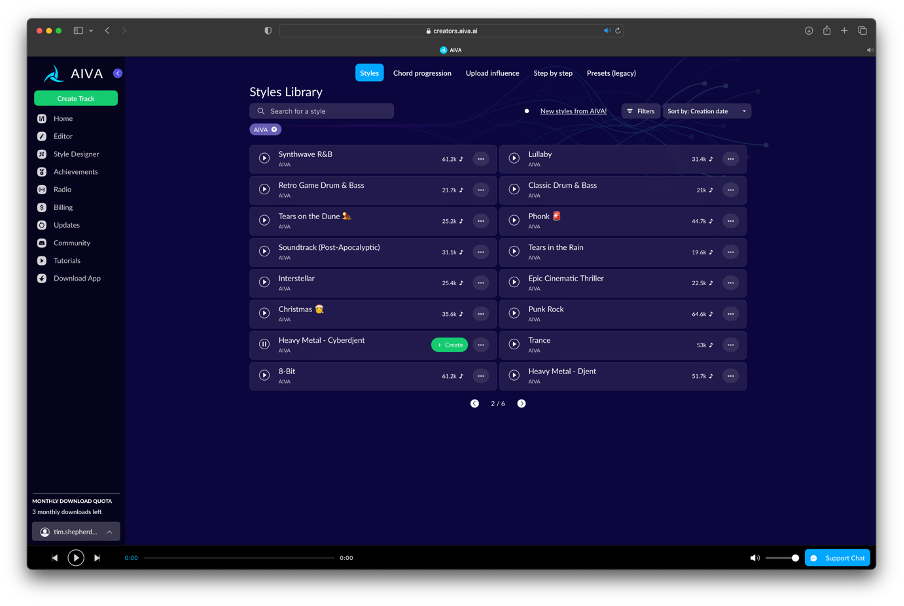

I decided on using AIVA.ai. The reason for choosing this platform was that there are so many YouTube videos about it. I started out with the idea of trying to have AIVA replicate the first-person shooter track that Suno created from that simple text prompt. However, AIVA does not work that way. On this platform you pick a genre and can create variations of it (Figure 6). Here is what it created (AIVA.ai: Version 1 – Based of CyberDjent (close to FPS game)).

I chose “Cyberdjent”, as a kind of heavy guitar and EDM crossover was what I was after. What I got was disappointing. The output sounds like a MIDI file from the early 2000s. The sounds, as with WavTool, are quite basic and don’t sound great. But there is an editor, and you can export MIDI for further processing and for changing the instruments in a DAW. What is interesting about this platform is the ability to take any track that you have previously generated and modified and use it as an influence for a new track. This would be a great way to create variety in a track.

What is working and what is a stumbling block?

This is where it gets tricky. As a music producer with extensive knowledge of computer hardware, software, and programming, I find that these tools offer creative opportunities. As tools they can help to inspire creative thinking. While the output of the platforms isn’t perfect, it could lead me down a path that I wasn’t originally looking at. For me, this is the promise of GAI: a co-pilot that can show me things I may not have thought of. As Charlie Harding says: “And, you know, it’s really fun getting starter ideas and little things that I personally wouldn’t think of and pulling those in. But using it for the entire process was very inhibiting.” (Pierce, 2023)

If I were somebody without a level of music production knowledge, GAI would not currently live up to the hype. With Suno, the output is quite polished musically speaking, but you cannot complete a full finished track with an intro, outro, and bridge. With WavTool, you cannot finish a track unless it’s vaporwave or very 80s synth in style, and the same goes for AIVA.ai.

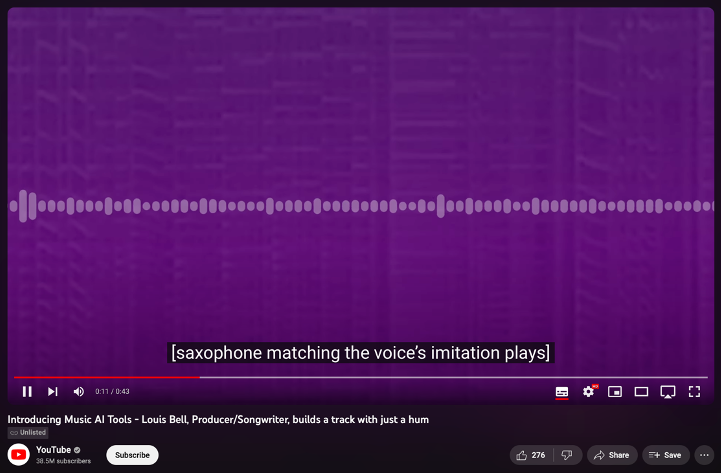

There is a new tool that Google are about to launch called Dream Track (Cohen and Reid, 2023) that, from the promotional videos, looks like it is made to remove all the stumbling blocks that I can see. Google has created a system that, from a text prompt, will create 30-second tracks that utilise the AI-generated voices of Alec Benjamin, Charlie Puth, Charli XCX, Demi Lovato, John Legend, Papoose, Sia, T-Pain, and Troye Sivan (Google, 2023). The software will also allow creators to create melodic lines from hummed melodies, transforming them into, for example, a saxophone part (Figure 7). This is a similar technology to the Vochlea Dubler, which generates MIDI data from a person’s voice (Vochlea Music, 2023). However, Google DeepMind’s version goes further and can take a person humming and create an orchestral arrangement (Google DeepMind, 2023). This last example is one of the most impressive technology demos that I have seen. Creating an interesting orchestral piece from a single melody line is remarkable.

This technology, powered by Google DeepMind’s Lyria, will open more creative avenues for creators, without the need for specialist music production knowledge. As this technology has only just been announced, and not yet launched, it is impossible to tell if this platform will live up to the videos shown.
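As a rough illustration of the voice-to-MIDI idea mentioned in relation to the Vochlea Dubler, here is a minimal sketch, assuming the pitch of a hummed note has already been detected as a frequency in hertz. It does not describe how Dubler or Google's tools work internally; it only shows the standard equal-temperament mapping from frequency to MIDI note number, where A4 (440 Hz) is note 69. The function names are hypothetical and chosen purely for illustration.

```python
import math

# Pitch-class names used to label MIDI note numbers.
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def frequency_to_midi(freq_hz: float, a4_hz: float = 440.0) -> int:
    """Map a detected frequency to the nearest MIDI note number.

    Uses the equal-temperament relation: note = 69 + 12 * log2(f / A4).
    """
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return round(69 + 12 * math.log2(freq_hz / a4_hz))


def midi_to_name(note: int) -> str:
    """Label a MIDI note number with pitch class and octave (60 is C4)."""
    octave = note // 12 - 1
    return f"{NOTE_NAMES[note % 12]}{octave}"


if __name__ == "__main__":
    # Hypothetical detected pitches from a hummed phrase, in hertz.
    for freq in (261.63, 329.63, 440.0):
        note = frequency_to_midi(freq)
        print(freq, note, midi_to_name(note))
```

A real system would also need pitch detection, note onset and offset detection, and smoothing, none of which is shown here.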

The one thing that Google/YouTube do bring up, and it is important for YouTube as their user terms have recently been updated, is that all AI-generated content needs to be flagged as such. To this end they will use a technology called “SynthID”, which digitally watermarks all generated content using a version of audio steganography (Google DeepMind, 2023). YouTube have even gone as far as creating an AI framework with three overriding principles, the second of which is “AI is ushering in a new age of creative expression, but it must include appropriate protections and unlock opportunities for music partners who decide to participate.” (Google DeepMind, 2023).
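To give a sense of what audio steganography means in general, here is a minimal sketch of the simplest textbook approach, least-significant-bit (LSB) embedding. This is an assumption-laden illustration only: it is not SynthID's method, which is not described in the sources used here, and a watermark this naive would not survive compression or editing. The function names and sample values are hypothetical.

```python
def embed_bits(samples: list[int], bits: list[int]) -> list[int]:
    """Hide one bit in the least significant bit of each audio sample.

    Each sample changes by at most 1, which is inaudible in 16-bit audio.
    """
    if len(bits) > len(samples):
        raise ValueError("not enough samples to hold the watermark")
    marked = list(samples)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract_bits(samples: list[int], count: int) -> list[int]:
    """Read the hidden bits back from the first `count` samples."""
    return [sample & 1 for sample in samples[:count]]


if __name__ == "__main__":
    # Hypothetical 16-bit PCM sample values and a 4-bit watermark.
    audio = [1000, -2001, 37, 0]
    watermark = [1, 0, 1, 1]
    marked_audio = embed_bits(audio, watermark)
    print(extract_bits(marked_audio, len(watermark)))
```

The point of the sketch is only that a mark can be carried inside the audio data itself without an audible change, rather than in separate metadata.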

Conclusion

Based on both my own research and the research done on The Vergecast, without a good knowledge of music production you currently won’t get release-ready tracks from the current GAI platforms.

So, is GAI a threat to creativity? No, I don’t think so. Even though GAI won’t currently remove all barriers to entry for aspiring creators, it can provide a starting point for a track or suggest variations on your work to create variety. As with all technology, it is a tool and is only really limited by what you attempt to use it for. The main limitation that my research indicated is that the training data influences the work generated. This leads me to the question: if everybody is using GAI that is trained on the same data, will the music generated become more homogenised? This is a large topic for research on its own, and not the focus of this paper.

I personally will embrace the creative opportunities that it can provide, though I believe that to really exploit those opportunities with current technology you will need some knowledge of music production. Ways of working will be affected for the better, the barriers to entry will fall, and creativity and its application will be helped, not hindered, by GAI. On the current trajectory I believe that we will have GAI that lowers the barriers to producing music, and I think that the next 10 years will be an exciting time as the technology catches up with the promise and inspires the next generation of artists to create their own work. I can’t wait to experience it.

References

Barreau, P. (2020) Aiva, AIVA, the AI Music Generation Assistant. Available at: https://www.aiva.ai/ (Accessed: 28 October 2023).

Cohen, L. and Reid, T. (16 November 2023) An early look at the possibilities as we experiment with AI and Music. Available at: https://blog.youtube/inside-youtube/ai-and-music-experiment/ (Accessed: 22 November 2023).

Eno, B. (1996) A Year With Swollen Appendices. London: Faber and Faber.

Gamble, L. (2023) ‘Dual Space – Lee Gamble’, in M. Voix (ed.) Spectres IV: A thousand voices. London, UK: Shelter Press, pp. 39–49.

Google (16 November 2023) Transforming the future of music creation. Available at: https://deepmind.google/discover/blog/transforming-the-future-of-music-creation/ (Accessed: 22 November 2023).

Google DeepMind (2023) Introducing Music AI Tool – Transforming singing into an orchestral score. Available at: https://www.youtube.com/watch?v=aC8I2YvL6Uo (Accessed: 22 November 2023).

Leona Lewis (2007) ‘Bleeding Love’, Spirit. Available at: Apple Music (Accessed: 18 December 2023).

Marr, B. (27 June 2023) Boost Your Productivity with Generative AI. Available at: https://hbr.org/2023/06/boost-your-productivity-with-generative-ai (Accessed: 20 December 2023).

Pierce, D. (2023) The Vergecast: Episode 2: We tried to make a hit song with only AI tools — and it got messy [Podcast]. 18 September 2023. Available at: https://www.theverge.com/23878445/ai-music-tools-magenta-suno-chatgpt-vergecast (Accessed: 28 October 2023).

Vochlea Music (2023) Dubler MIDI Capture – Full Walkthrough – December 2023. Available at: https://www.youtube.com/watch?v=wcuDeFbTPYo (Accessed: 5 January 2024).

Wavtool (no date) WavTool. Available at: https://wavtool.com/ (Accessed: 28 October 2023).

Watch the Sound: AutoTune (30 July 2021) Tremolo Productions. Available at: Apple TV+ (Accessed: 12 October 2023).

Bibliography

Barreau, P. (2020) Aiva, AIVA, the AI Music Generation Assistant. Available at: https://www.aiva.ai/ (Accessed: 28 October 2023).

Boomy (no date) Make generative music with artificial intelligence, Boomy. Available at: https://boomy.com/ (Accessed: 28 October 2023).

Chow, A.R. (2020) Musicians using AI to create otherwise impossible new songs, Time. Available at: https://time.com/5774723/ai-music/ (Accessed: 28 October 2023).

Cohen, L. and Reid, T. (16 November 2023) An early look at the possibilities as we experiment with AI and Music. Available at: https://blog.youtube/inside-youtube/ai-and-music-experiment/ (Accessed: 22 November 2023).

Eno, B. (1996) A Year With Swollen Appendices. London: Faber and Faber.

Gamble, L. (2023) ‘Dual Space – Lee Gamble’, in M. Voix (ed.) Spectres IV: A thousand voices. London, UK: Shelter Press, pp. 39–49.

Google (16 November 2023) Transforming the future of music creation. Available at: https://deepmind.google/discover/blog/transforming-the-future-of-music-creation/ (Accessed: 22 November 2023).

Google DeepMind (2023) Introducing Music AI Tool – Transforming singing into an orchestral score. Available at: https://www.youtube.com/watch?v=aC8I2YvL6Uo (Accessed: 22 November 2023).

Lamarre, C. (2023) Recording Academy says AI-generated Drake & The Weeknd Song isn’t Grammy eligible after all, Billboard. Available at: https://www.billboard.com/music/awards/ai-generated-drake-weeknd-song-not-grammy-eligible-harvey-mason-jr-1235409036/ (Accessed: 28 October 2023).

Leona Lewis (2007) ‘Bleeding Love’, Spirit. Available at: Apple Music (Accessed: 18 December 2023).

Marr, B. (27 June 2023) Boost Your Productivity with Generative AI. Available at: https://hbr.org/2023/06/boost-your-productivity-with-generative-ai (Accessed: 20 December 2023).

Pierce, D. (2023) The Vergecast: Episode 2: We tried to make a hit song with only AI tools — and it got messy [Podcast]. 18 September 2023. Available at: https://www.theverge.com/23878445/ai-music-tools-magenta-suno-chatgpt-vergecast (Accessed: 28 October 2023).

Tao (2021) About the verbasizer, Verbasizer. Available at: https://verbasizer.com/about.php (Accessed: 28 October 2023).

Vochlea Music (2023) Dubler MIDI Capture – Full Walkthrough – December 2023. Available at: https://www.youtube.com/watch?v=wcuDeFbTPYo (Accessed: 5 January 2024).

Wavtool (no date) WavTool. Available at: https://wavtool.com/ (Accessed: 28 October 2023).

Watch the Sound: AutoTune (30 July 2021) Tremolo Productions. Available at: Apple TV+ (Accessed: 12 October 2023).